Notes on Web Audio API

Jun 28, 2020

During the development of Kraken SFU, I needed a client app for real-time testing. Because of the complexity of WebRTC, it is not easy to port the client of another SFU to the Kraken API. So we built our own and turned it into a production-ready web app, Mornin.

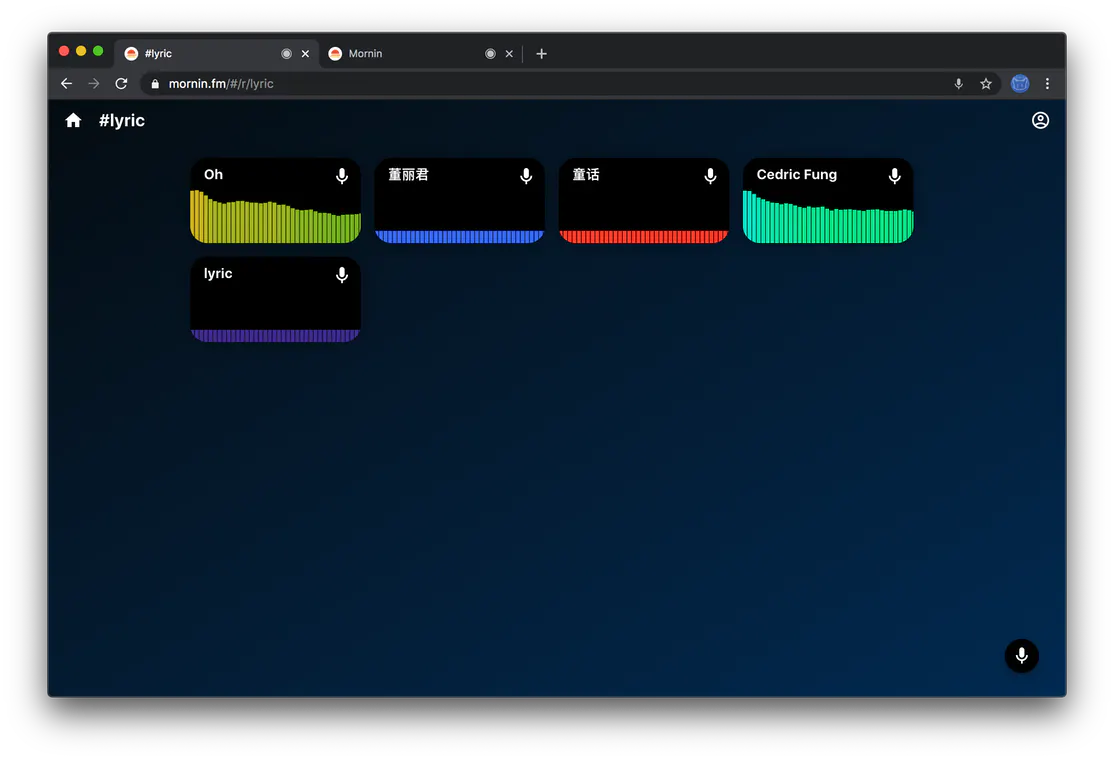

To facilitate audio data testing, we make heavy use of the Web Audio API for audio visualization, which lets us watch the sound instead of relying on listening. We can also run computations directly on the audio stream data to report errors. This testing feature led to a good-looking web app, and it gave us the opportunity to run real-life tests with about 100 participants in a room.

AudioContext

AudioContext is the entry point to the Web Audio API, and all modern desktop and mobile browsers support it.

const AudioContext = window.AudioContext || window.webkitAudioContext;

const audioCtx = new AudioContext();

An AudioContext is a kind of pipeline. Once you have an AudioContext initialized, you can connect an audio source to a processing node that manipulates the audio data and passes the result on to another node. The speaker is also a node, the one that outputs the audio stream.

NOTE: AudioContext is a resource-intensive object; both mobile and desktop Safari limit you to a maximum of 4 AudioContext instances existing at the same time. It’s recommended to create one AudioContext and reuse it instead of initializing a new one each time, and it’s fine to use a single AudioContext for several different audio sources and pipelines concurrently.
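One way to follow this advice is a small lazy getter that hands out a single shared context. This is a minimal sketch, not the code used in Mornin: `getSharedAudioContext` and its `scope` parameter are hypothetical names introduced here, with `scope` defaulting to `globalThis` (that is, `window` in a browser).

```javascript
// Holds the single shared AudioContext once it has been created.
let sharedAudioCtx = null;

// Returns the shared AudioContext, creating it on first use.
// `scope` is a hypothetical parameter: the object that exposes the
// AudioContext constructor (globalThis, i.e. window, in a browser).
function getSharedAudioContext(scope = globalThis) {
  if (sharedAudioCtx === null) {
    const Ctor = scope.AudioContext || scope.webkitAudioContext;
    if (!Ctor) {
      throw new Error("Web Audio API is not available in this environment");
    }
    sharedAudioCtx = new Ctor();
  }
  return sharedAudioCtx;
}
```

Every caller that needs a context would then call `getSharedAudioContext()` instead of `new AudioContext()`, so only one instance counts against the Safari limit.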

MediaStreamAudioSourceNode

For our WebRTC SFU testing, we use RTCPeerConnection.ontrack to get the audio stream and use it as the audio source for audioCtx.

pc.ontrack = (event) => {

// Use the first stream attached to the incoming track as the audio source.

const stream = event.streams[0];

const source = audioCtx.createMediaStreamSource(stream);

};

I reuse the same audioCtx object for all new streams, because AudioContext is more like a pipeline manager, and each new source node is the beginning of a new pipeline. After a stream source is created, it’s ready to be connected to other nodes, e.g. an AnalyserNode to read audio samples and visualize them, and then output the identical stream to the speaker, the destination.
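The pipeline described above could be wired up roughly as follows. This is a sketch under assumptions rather than the app's actual code: `buildAnalysedPipeline`, `rmsLevel`, and `readLevel` are hypothetical helper names, and the `fftSize` of 2048 is an arbitrary choice. It reads samples with `getByteTimeDomainData`, which fills the array with unsigned bytes where 128 represents silence.

```javascript
// Wire source -> analyser -> destination (speaker) on an existing context.
function buildAnalysedPipeline(audioCtx, stream) {
  const source = audioCtx.createMediaStreamSource(stream);
  const analyser = audioCtx.createAnalyser();
  analyser.fftSize = 2048; // arbitrary choice: samples per analysis window

  source.connect(analyser);
  // AnalyserNode passes audio through unchanged, so the speaker
  // receives the same stream that is being analysed.
  analyser.connect(audioCtx.destination);
  return { source, analyser };
}

// Root-mean-square level (0..1) of one window of byte time-domain samples.
function rmsLevel(samples) {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (const sample of samples) {
    const centered = (sample - 128) / 128; // map 0..255 to roughly -1..1
    sumOfSquares += centered * centered;
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Read the current window from the analyser and return its RMS level.
function readLevel(analyser) {
  const samples = new Uint8Array(analyser.fftSize);
  analyser.getByteTimeDomainData(samples);
  return rmsLevel(samples);
}
```

In a page, `readLevel(analyser)` could be called from a `requestAnimationFrame` loop to drive a visualization, or to flag a stream that stays silent for longer than expected.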

NOTE: it’s quite common for an audio stream to contain several audio tracks, and MediaStreamAudioSourceNode uses only one of them, effectively an arbitrary one, so using it with such a stream is ambiguous. The best practice is to always ensure you have only one track by inspecting stream.getAudioTracks(). There is a newer MediaStreamTrackAudioSourceNode, but only the latest Firefox supported it at the time of writing.
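A defensive check along these lines can be sketched as below. `singleTrackStream` is a hypothetical helper. It assumes the `MediaStream(tracks)` constructor is available and, purely as an illustrative policy, keeps the first audio track when several are present.

```javascript
// Return a stream that exposes exactly one audio track.
// `MediaStreamCtor` is a hypothetical parameter so the constructor can be
// swapped out; in a browser it defaults to the global MediaStream.
function singleTrackStream(stream, MediaStreamCtor = globalThis.MediaStream) {
  const tracks = stream.getAudioTracks();
  if (tracks.length === 0) {
    throw new Error("stream has no audio track");
  }
  if (tracks.length === 1) {
    return stream; // already unambiguous, use it as-is
  }
  // Illustrative policy (an assumption): keep the first track, drop the rest.
  console.warn(`stream has ${tracks.length} audio tracks, using the first`);
  return new MediaStreamCtor([tracks[0]]);
}
```

The result can then be passed to `audioCtx.createMediaStreamSource()`, so the source node never has to choose between tracks.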
